
Gemini Robotics: The generation of physical agents

Artificial intelligence is taking a decisive new step with the arrival of Gemini Robotics, a family of models designed not only to understand the world, but also to act in it autonomously. While classic language models remain confined to text-based exchanges, Gemini Robotics paves the way for a new era, that of physical AI agents capable of perceiving, reasoning, planning, and executing tasks in the real world. This breakthrough, driven by Google DeepMind and Google AI, marks a major turning point toward robots that are smarter, more adaptable… and above all, more useful in everyday life.

Two brains for a smart robot

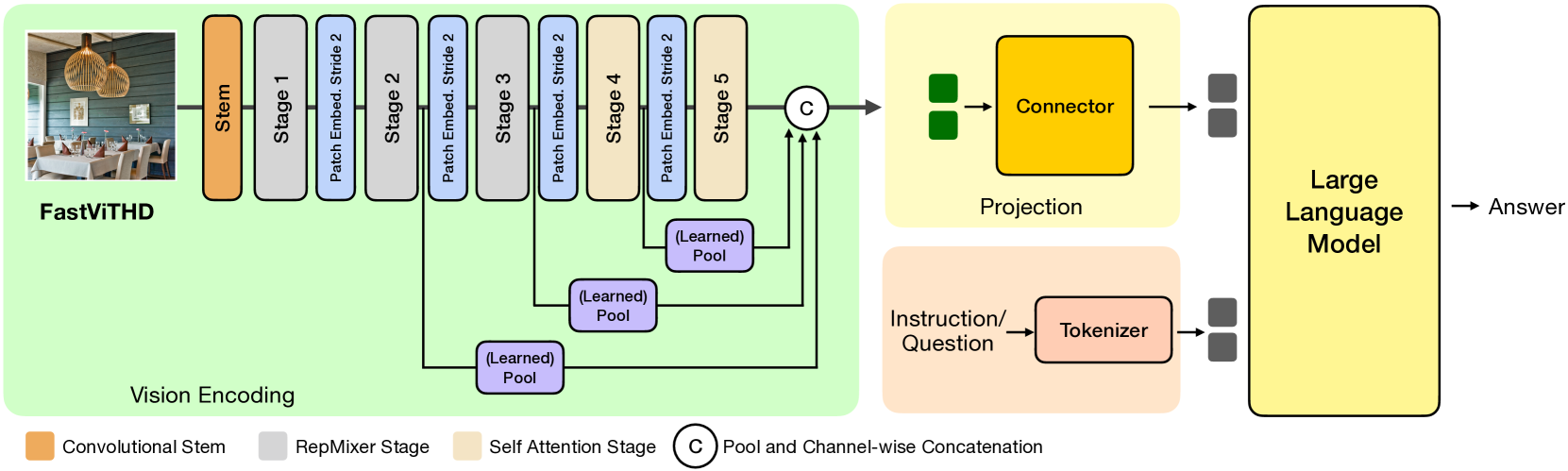

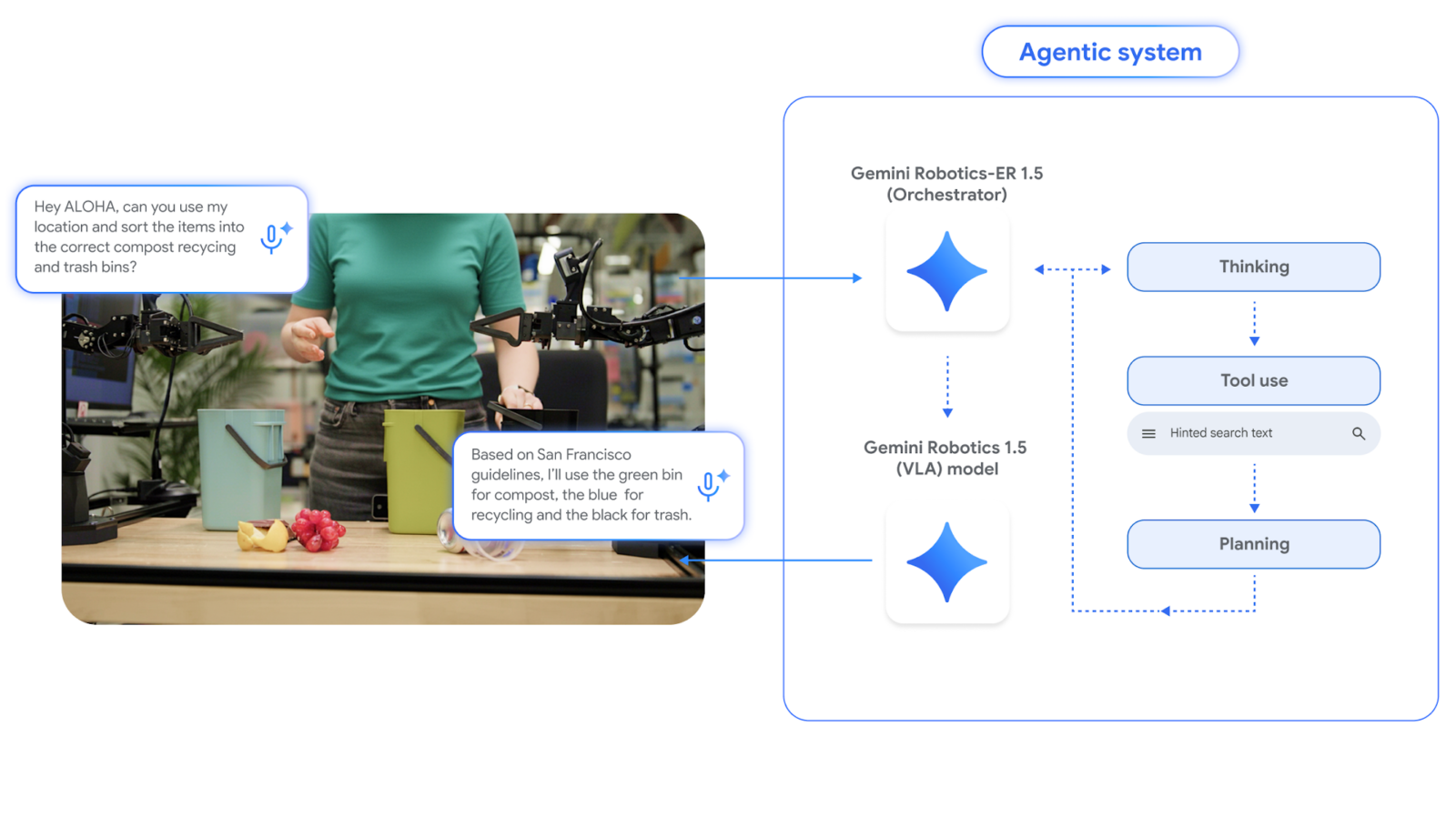

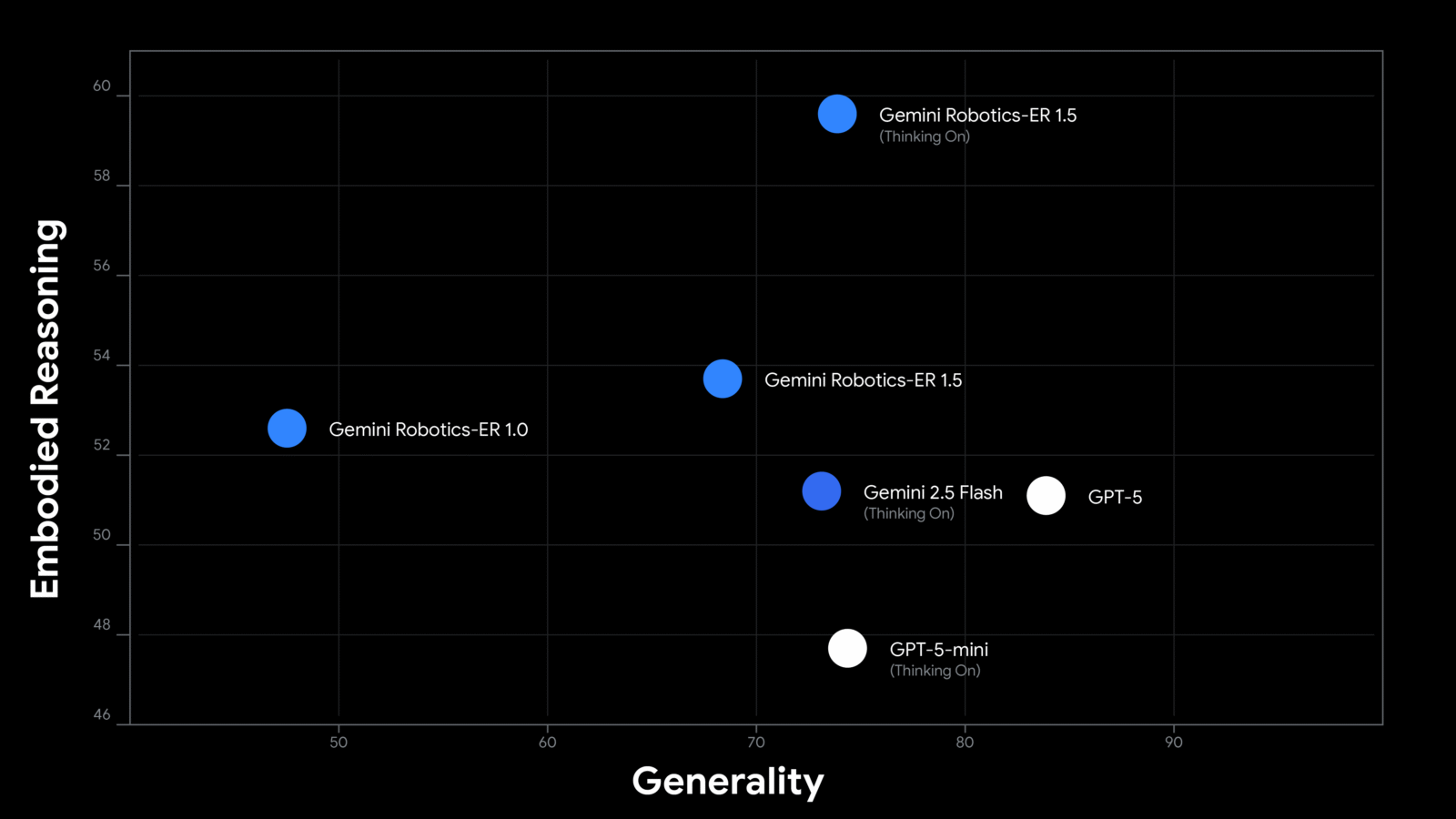

At the core of this revolution are two complementary models: Gemini Robotics-ER 1.5 and Gemini Robotics 1.5. The first, Gemini Robotics-ER 1.5, serves as the “strategic brain.” It is an embodied reasoning model specialized in spatial understanding, long-horizon planning, and calling external tools (such as a Google search to look up local waste-sorting rules). It understands natural-language instructions, breaks a complex task into simple steps, and orchestrates the entire process.

The second, Gemini Robotics 1.5, is a vision–language–action (VLA) model. It receives detailed instructions from the strategic brain and translates them into precise motor commands for the robot. This model doesn’t just execute; it also “thinks” before acting, generating an internal chain of reasoning that lets it adapt its movements to the situation. For example, when given the instruction “sort the laundry by color,” it understands not only what a color is, but also how to handle a red sweater gently and place it in the correct basket.
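To make the division of labor concrete, here is a minimal sketch of the planner/executor pattern described above. It is an illustration only, not Google's implementation: `plan`, `execute`, `run_task`, and the canned step list are hypothetical stand-ins for the reasoning model and the VLA model.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Step:
    """One simple natural-language instruction handed to the action model."""
    instruction: str


def plan(task: str) -> List[Step]:
    # Hypothetical stand-in for the "strategic brain": a real planner would
    # reason over camera images and could call external tools.
    canned = {
        "sort the laundry by color": [
            "pick up the red sweater",
            "place it in the correct basket",
        ],
    }
    # Unknown tasks fall back to a single step that repeats the task.
    return [Step(text) for text in canned.get(task, [task])]


def execute(step: Step) -> bool:
    # Hypothetical stand-in for the VLA model: a real one would turn the
    # instruction into motor commands. Here we only log and report success.
    print(f"executing: {step.instruction}")
    return True


def run_task(
    task: str,
    planner: Callable[[str], List[Step]] = plan,
    executor: Callable[[Step], bool] = execute,
) -> bool:
    """Decompose the task, then run each step; stop at the first failure."""
    for step in planner(task):
        if not executor(step):
            return False
    return True


if __name__ == "__main__":
    run_task("sort the laundry by color")
```

Keeping the planner and the executor behind plain function interfaces mirrors the idea that the high-level model orchestrates while the low-level model acts, and lets either one be swapped out independently.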

Spatial understanding and cross-embodiment learning

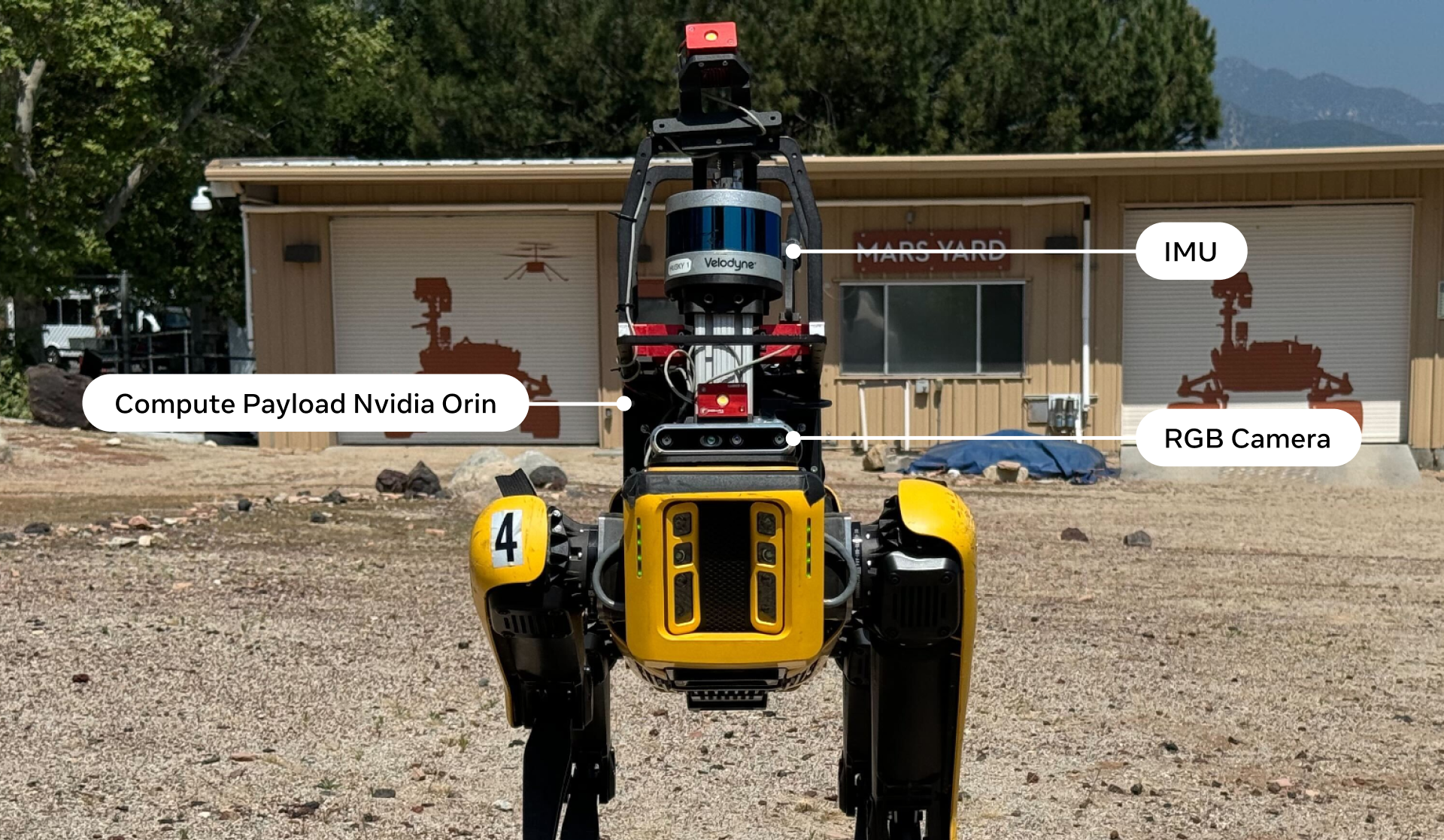

What sets Gemini Robotics apart from earlier approaches is its ability to combine fine-grained perception, temporal reasoning, and robust execution. Thanks to state-of-the-art spatial understanding, Gemini Robotics-ER 1.5 can precisely locate objects in an image (as normalized coordinates), identify their state (open/closed, full/empty), or even describe a sequence of actions in a video with exact temporal segmentation. These capabilities are essential for enabling the robot to understand not only what is there, but also what has happened and what it should do next.
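Normalized coordinates have to be mapped back to pixels before a robot or a visualization can use them. The sketch below shows that conversion under stated assumptions: the article does not specify the output format, so the JSON shape, the `[y, x]` ordering, and the 0 to 1000 range used here are assumptions, and `to_pixels` and `parse_points` are hypothetical helper names.

```python
import json
from typing import Dict, Sequence, Tuple

# Assumed normalization range (0..1000); the article only says "normalized".
SCALE = 1000


def to_pixels(point_yx: Sequence[float], width: int, height: int) -> Tuple[int, int]:
    """Convert an assumed [y, x] normalized point to (x, y) pixel coordinates."""
    y_norm, x_norm = point_yx
    x_px = round(x_norm / SCALE * width)
    y_px = round(y_norm / SCALE * height)
    return x_px, y_px


def parse_points(reply: str, width: int, height: int) -> Dict[str, Tuple[int, int]]:
    """Parse an assumed JSON reply of the form [{"point": [y, x], "label": "..."}]."""
    items = json.loads(reply)
    return {item["label"]: to_pixels(item["point"], width, height) for item in items}


if __name__ == "__main__":
    # Hand-written example reply, not real model output.
    reply = '[{"point": [500, 250], "label": "mug"}]'
    print(parse_points(reply, width=640, height=480))  # {'mug': (160, 240)}
```

Because the coordinates are independent of image resolution, the same model reply can be reused after the camera frame is resized; only `width` and `height` change.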

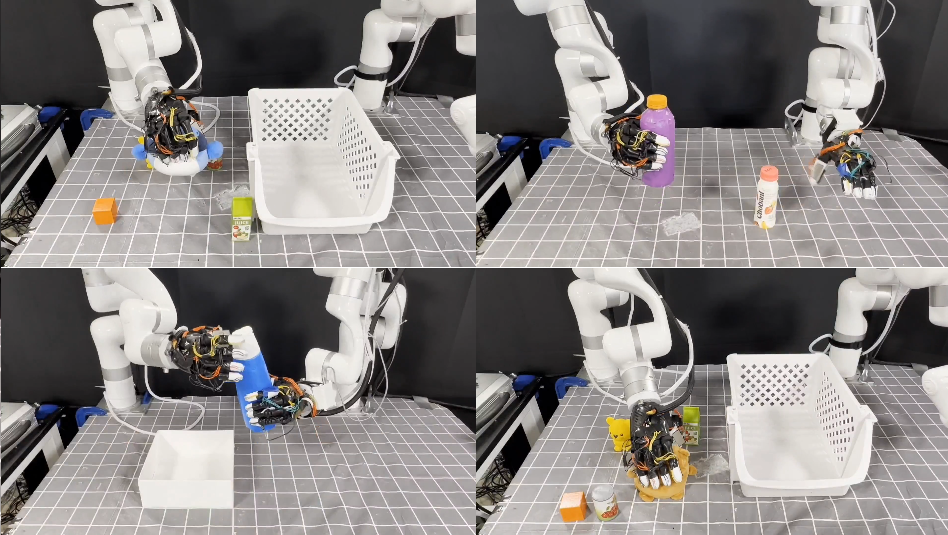

Another major innovation: Gemini Robotics 1.5 learns across embodiments. This means it can transfer skills acquired on one type of robot (for example, the ALOHA 2 robot) to a completely different robot (such as Apptronik’s Apollo humanoid) without any task-specific retraining [1]. This is known as cross-embodiment learning. Such generalization dramatically speeds up the development of new robotic behaviors and paves the way for more versatile systems.

Toward robots that are useful, responsible, and accessible

Google is already making Gemini Robotics-ER 1.5 available to developers in preview through Google AI Studio and the Gemini API. This allows the community to start experimenting with this high-level “brain” to create physical agents capable of handling everyday tasks: tidying a table, sorting waste, making coffee, and so on. These scenarios, simple on the surface, actually require a subtle blend of perception, contextual reasoning, and motor coordination, which is exactly what Gemini Robotics now makes possible.

Of course, bringing AI agents into the physical world raises safety questions. Google emphasizes a “layered” approach, combining software filters, semantic risk reasoning, and hardware safety systems (such as emergency stops). The model has also been evaluated on an improved version of the ASIMOV benchmark, which is dedicated to semantic safety in robotics.
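The “layered” idea can be pictured as a chain of independent checks, any one of which can veto an action. The following is a minimal sketch under that reading, not a description of Google's actual safety stack: the three check functions, the keyword list, the `risk` score, and the 0.5 threshold are all hypothetical.

```python
from typing import Callable, List, Optional

# Each layer inspects a proposed action and returns a reason to block it,
# or None if it has no objection.
Check = Callable[[dict], Optional[str]]


def software_filter(action: dict) -> Optional[str]:
    # Hypothetical keyword blocklist standing in for software filters.
    banned = {"knife", "flame"}
    hits = banned & set(action["instruction"].lower().split())
    return f"blocked keyword: {sorted(hits)[0]}" if hits else None


def semantic_risk(action: dict) -> Optional[str]:
    # Hypothetical risk score in [0, 1], standing in for the model's
    # semantic reasoning about whether an action is dangerous in context.
    return "semantic risk too high" if action.get("risk", 0.0) > 0.5 else None


def hardware_stop(action: dict) -> Optional[str]:
    # Hypothetical flag mirroring a physical emergency-stop button.
    return "emergency stop engaged" if action.get("estop", False) else None


LAYERS: List[Check] = [software_filter, semantic_risk, hardware_stop]


def vet(action: dict, layers: List[Check] = LAYERS) -> Optional[str]:
    """Return the first blocking reason, or None if every layer approves."""
    for check in layers:
        reason = check(action)
        if reason is not None:
            return reason
    return None


if __name__ == "__main__":
    print(vet({"instruction": "wipe the table", "risk": 0.1}))
    print(vet({"instruction": "hand me the knife"}))
```

The point of layering is redundancy: an action proceeds only if every layer approves, so a miss in one layer can still be caught by another.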

The era of physical AI agents

Gemini Robotics doesn’t just improve robot performance; it redefines the role of robots. We are moving from simple, preprogrammed executors to autonomous physical AI agents capable of understanding complex human intentions and responding adaptively. In the longer term, this technology could transform entire sectors, such as logistics, in-home assistance, and industrial maintenance, while bringing AI closer to our tangible, everyday lives.

[1] Gemini Robotics 1.5 brings AI agents into the physical world

[2] Building the Next Generation of Physical Agents with Gemini Robotics-ER 1.5

Gemini Robotics Team, Abeyruwan, S., Ainslie, J., Alayrac, J.-B., Gonzalez Arenas, M., Armstrong, T., Balakrishna, A., Baruch, R., Bauza, M., Blokzijl, M., Bohez, S., Bousmalis, K., Brohan, A., Buschmann, T., Byravan, A., Cabi, S., Caluwaerts, K., Casarini, F., Chang, O., … Zhou, Y. (2025). Gemini Robotics: Bringing AI into the Physical World. arXiv.