MedGemma 1.5 and MedASR: Google Redefines Open-Source, Multimodal Medical AI

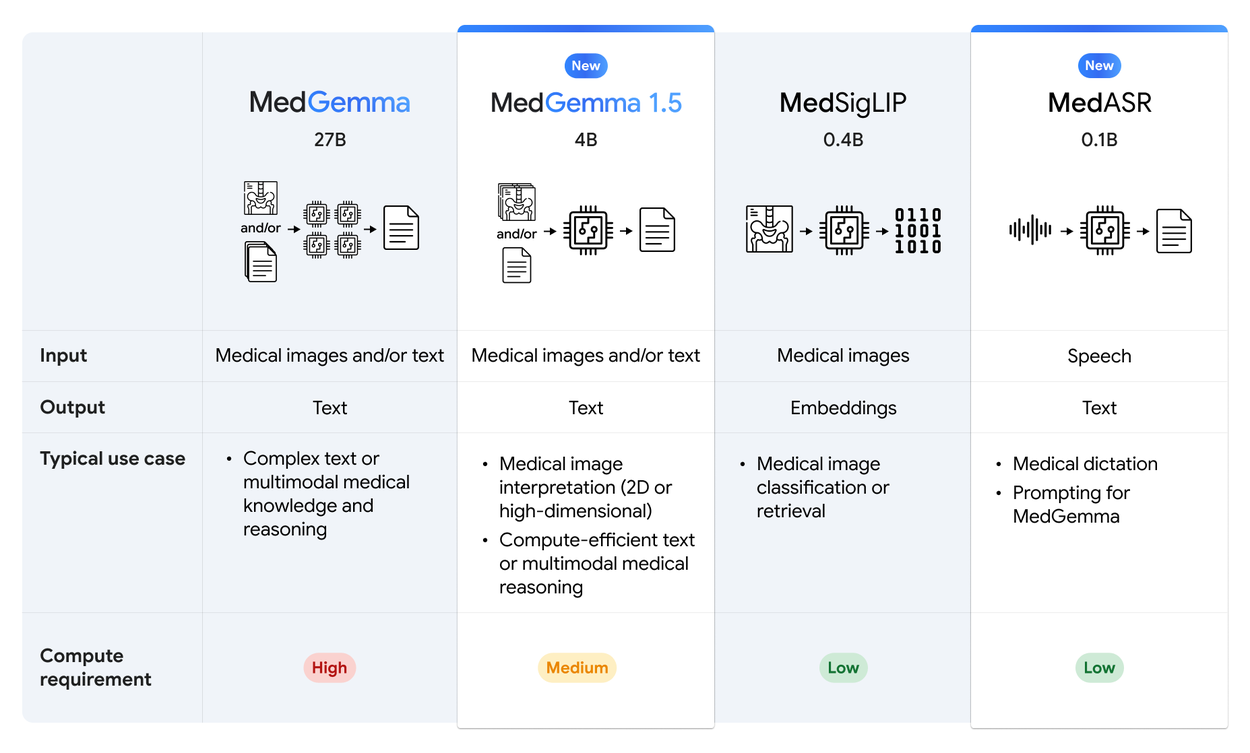

MedGemma 1.5 and MedASR from Google: an open-source revolution in medical AI.

The healthcare sector is undergoing rapid technological transformation. As we enter 2026, Google Research has delivered a major update to its open-source medical AI suite. If you’re a developer, data scientist, or simply passionate about the intersection of code and medicine, read on: we break down the launch of MedGemma 1.5 and the brand-new MedASR.

A New Era for AI in Healthcare

Artificial intelligence is no longer confined to theoretical promises in medicine; it has entered a phase of industrialization and democratization. With this latest wave of announcements, Google reaffirms its commitment to providing robust, open-source software building blocks. This strategy aims to bridge the gap between the immense computational power of tech giants and the real-world needs of frontline healthcare practitioners, equipping developers with the tools necessary to build the medicine of tomorrow.

Accelerating Technological Adoption

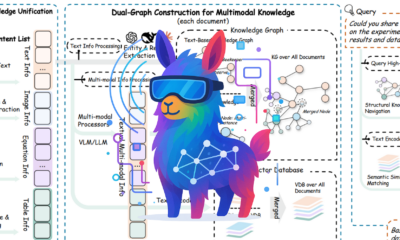

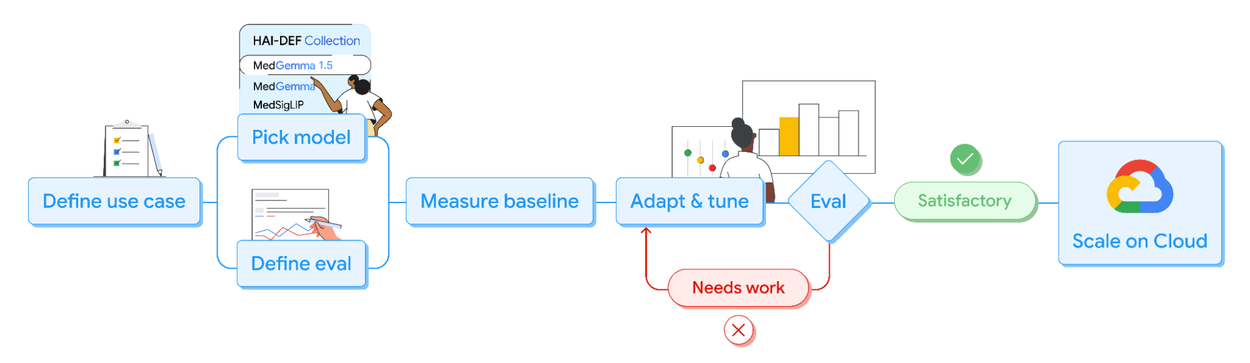

AI is no longer just making headlines in the tech world; it is now transforming the healthcare sector at scale. According to the latest data, the healthcare industry is adopting AI at twice the rate of the broader economy. To support this transformation, Google has consolidated its strategy around the Health AI Developer Foundations (HAI-DEF) program.

The goal is clear: not to keep the most powerful tools locked away in research labs, but to place them directly into the hands of the broader developer community. Models like MedGemma are not designed as rigid, finished products, but rather as foundational components, or “starting points”, that developers can evaluate, adapt, and specialize for specific clinical use cases.

Unified Architecture and Accessibility

What makes this announcement particularly exciting for us developers is the strong emphasis on technical accessibility. Google has clearly understood that raw computational power is useless if it can’t be practically deployed. These new models are immediately available through platforms we use daily, such as Hugging Face and Kaggle, and are also seamlessly integrated for industrial-scale deployment on Google Cloud via Vertex AI.

This “developer-first” approach drastically reduces the friction between cutting-edge academic research and the development of real-world applications, whether for MedTech startups or large hospital systems looking to modernize their software infrastructure.

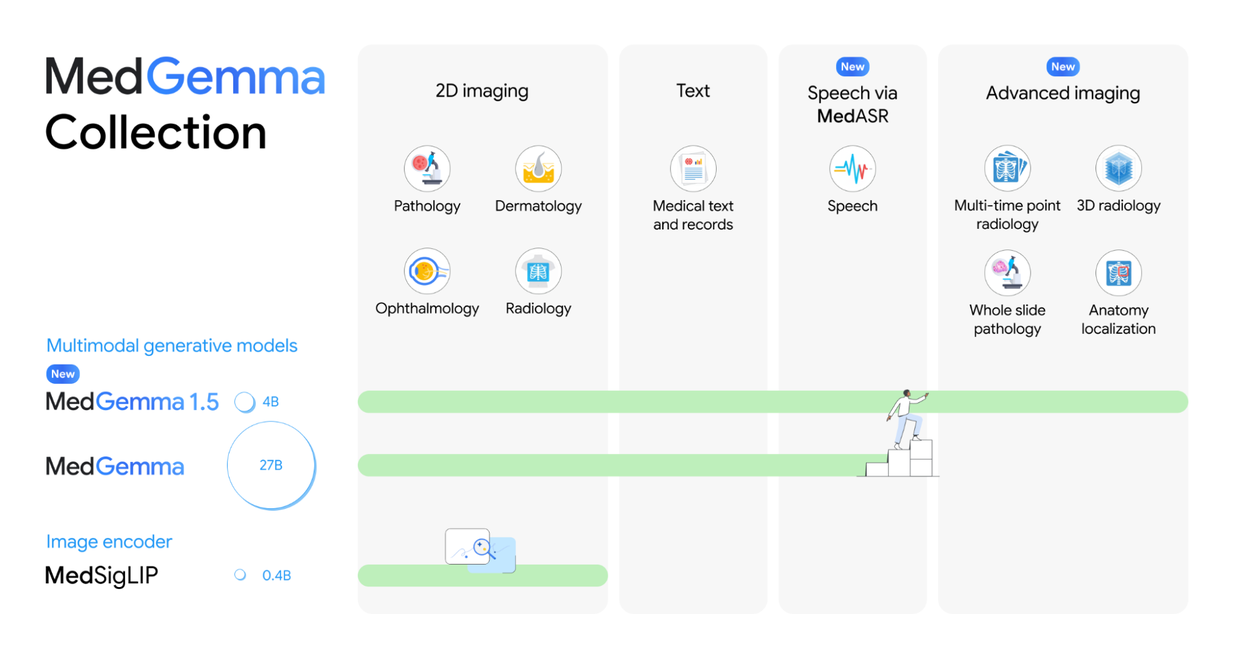

MedGemma 1.5: Beyond 2D Imaging

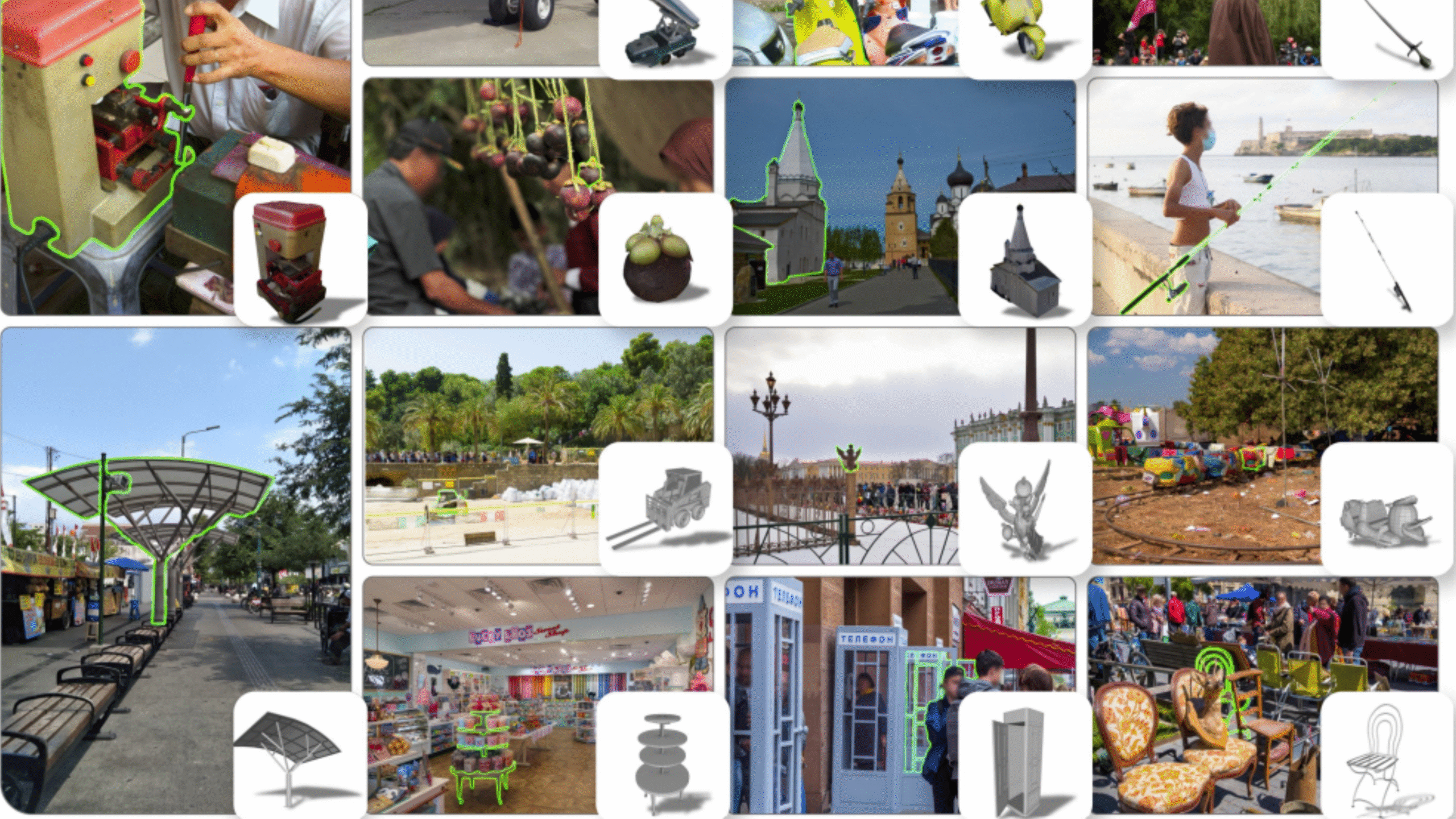

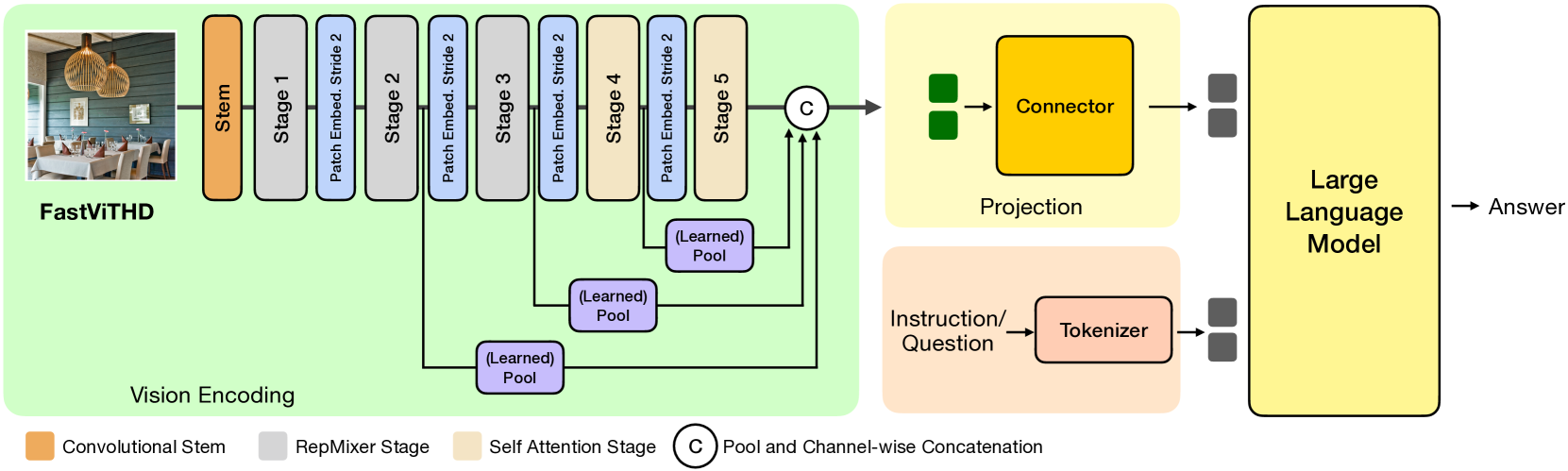

While the previous version of MedGemma had already demonstrated strong performance in analyzing standard X-rays, modern medicine is far too complex to be limited to static, two-dimensional images. The human body is a dynamic, three-dimensional structure that evolves over time. This is precisely the challenge MedGemma 1.5 tackles, breaking through the barrier of “true” multimodality by integrating spatial understanding (3D) and temporal analysis, thereby bringing AI closer to the real-world perception of an expert radiologist.

Handling 3D Volumetric Imaging

The true technological breakthrough of MedGemma 1.5 lies in its ability to “see” in three dimensions. Until now, most multimodal models were limited to analyzing flat, 2D images, such as conventional X-rays. MedGemma 1.5 shatters this limitation by natively supporting high-dimensional medical imaging, including computed tomography (CT scans) and magnetic resonance imaging (MRI).

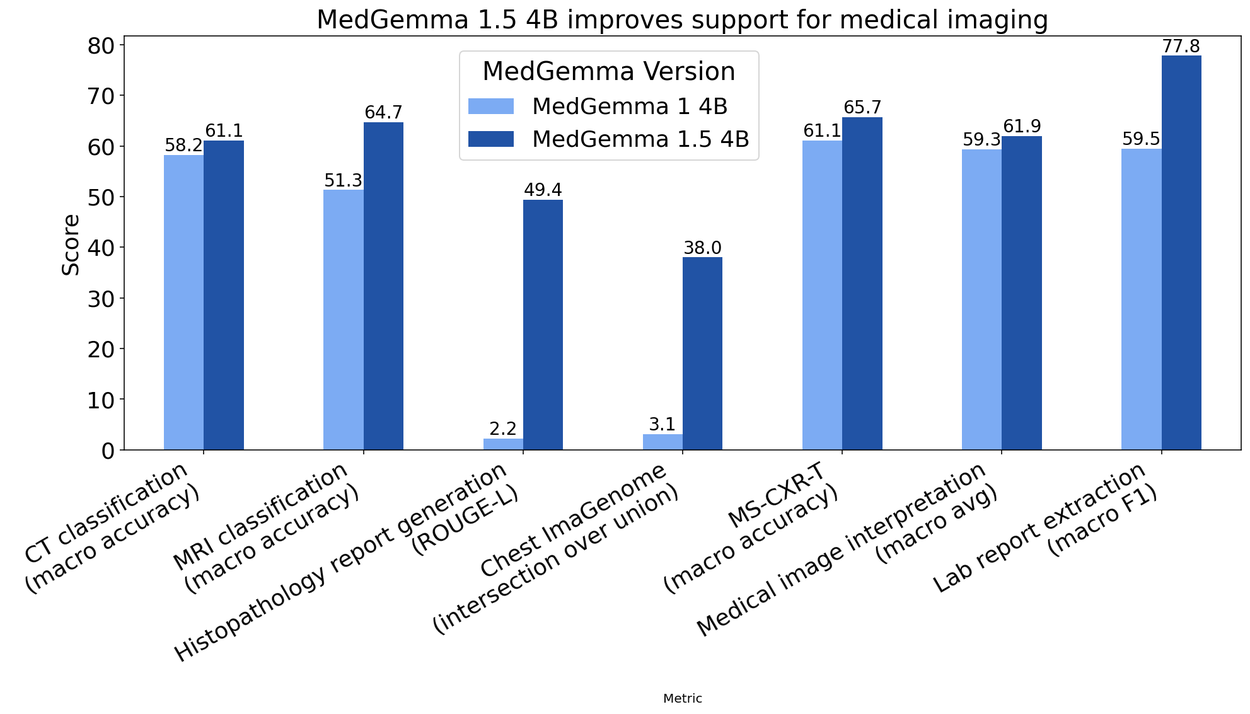

So, how does this actually work under the hood? The model doesn’t just process a single image; it ingests multiple “slices” or patches from a 3D volume, accompanied by a textual prompt describing the task. This lets the AI capture the spatial structure of an organ or lesion, which a single 2D image cannot convey because it carries no depth information. On internal benchmarks, this approach has boosted accuracy by 14% in MRI result classification compared to the previous version, a substantial leap for complex diagnostic support.
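
To make the "slices plus prompt" idea concrete, here is a minimal sketch of how a client might pick a fixed number of evenly spaced slices from a volume and pair them with a task prompt. The names `sample_slice_indices`, `VolumeRequest`, and `build_request`, as well as the default of 8 slices, are assumptions for illustration; the source does not describe how MedGemma 1.5 actually selects or encodes slices.

```python
from dataclasses import dataclass
from typing import List


def sample_slice_indices(depth: int, num_slices: int) -> List[int]:
    """Return up to `num_slices` evenly spaced indices in [0, depth - 1]."""
    if depth <= 0 or num_slices <= 0:
        raise ValueError("depth and num_slices must be positive")
    if num_slices >= depth:
        # Small volume: keep every slice.
        return list(range(depth))
    if num_slices == 1:
        # A single slice: take the middle of the volume.
        return [depth // 2]
    # Spread the picks so the first and last slices are always included.
    step = (depth - 1) / (num_slices - 1)
    return [round(i * step) for i in range(num_slices)]


@dataclass
class VolumeRequest:
    """Hypothetical container: which slices to send, plus the text prompt."""
    slice_indices: List[int]
    prompt: str


def build_request(depth: int, prompt: str, max_slices: int = 8) -> VolumeRequest:
    # max_slices=8 is an arbitrary illustrative budget, not a model limit.
    return VolumeRequest(sample_slice_indices(depth, max_slices), prompt)


if __name__ == "__main__":
    request = build_request(64, "Describe any abnormal findings in this CT volume.")
    print(request.slice_indices)
```

Sampling evenly across the whole depth is only one possible choice; a real application might instead focus on a region of interest or send overlapping patches.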

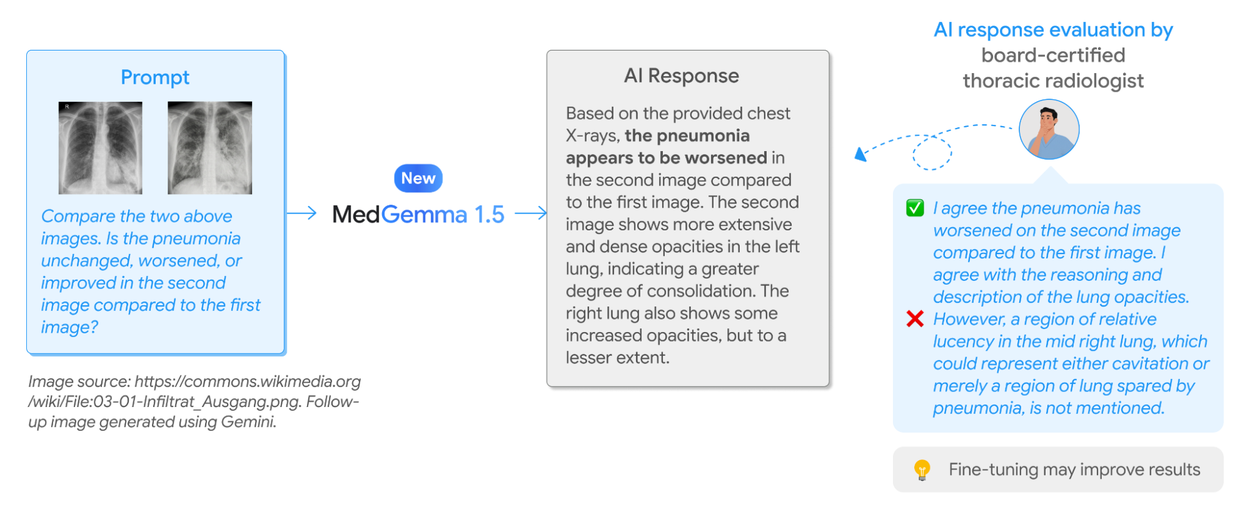

Longitudinal and Temporal Analysis

In medicine, a patient’s condition at a single point in time (T) matters, but their clinical trajectory matters even more. This is where another major innovation comes in: longitudinal medical imaging. MedGemma 1.5 can now analyze time-series data, such as comparing chest X-rays taken at different points in time.

This allows the AI to determine whether a condition, like pneumonia or a tumor, has worsened, remained stable, or resolved. In testing, this ability to contextualize images over time improved accuracy by 5% on the MS-CXR-T benchmark. For developers, this opens the door to building intelligent assistants capable of auto-generating follow-up reports, automatically highlighting subtle changes that a fatigued clinician might overlook after hours on shift.
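
As a rough sketch of what a follow-up assistant needs before it ever calls a model, the snippet below orders a patient's studies by acquisition date, pairs each study with its predecessor, and builds a comparison prompt. The `Study` class, the prompt wording, and the three labels are assumptions taken from the description above, not an official MedGemma prompt format.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Tuple

# Labels mirror the outcomes named in the text; the real label set may differ.
CHANGE_LABELS = ("worsened", "stable", "resolved")


@dataclass(frozen=True)
class Study:
    """Hypothetical record for one imaging study."""
    study_id: str
    acquired: date


def consecutive_pairs(studies: List[Study]) -> List[Tuple[Study, Study]]:
    """Sort studies chronologically and pair each one with the next."""
    ordered = sorted(studies, key=lambda s: s.acquired)
    return list(zip(ordered, ordered[1:]))


def comparison_prompt(prior: Study, current: Study) -> str:
    """Build a text prompt asking how the finding changed between two studies."""
    days = (current.acquired - prior.acquired).days
    return (
        f"Compare the current study ({current.study_id}) with the prior study "
        f"({prior.study_id}) taken {days} days earlier. "
        f"Answer with one of: {', '.join(CHANGE_LABELS)}."
    )


if __name__ == "__main__":
    history = [
        Study("cxr-2", date(2025, 1, 15)),
        Study("cxr-1", date(2025, 1, 1)),
    ]
    for prior, current in consecutive_pairs(history):
        print(comparison_prompt(prior, current))
```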

Optimized 4B Model

It’s often tempting to assume that “bigger is better” when it comes to large language models. Yet Google has made the strategic decision to release an optimized version with 4 billion parameters (4B). Why is this great news? Because a model of this size strikes the perfect balance between performance and computational efficiency.

This model is lightweight enough to run on modest infrastructure, and potentially even in offline (edge computing) environments, making it ideal for safeguarding sensitive hospital data or operating in areas with limited connectivity. Despite its compact size, MedGemma 1.5 4B outperforms its predecessor not only in medical imaging but also in pure textual reasoning, achieving a 5% improvement on the MedQA benchmark and a remarkable 22% gain on electronic health record–based question answering (EHRQA).
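
A quick back-of-the-envelope calculation shows why a 4B model fits on modest hardware. The sketch below estimates memory for the weights alone at common numeric precisions. It assumes exactly 4 billion parameters and ignores activations, caches, and any runtime overhead, so treat the figures as lower bounds rather than deployment requirements.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"float32": 4.0, "bfloat16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: int, dtype: str) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    params = 4_000_000_000  # nominal "4B"; the exact count may differ
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype:>8}: {weight_memory_gb(params, dtype):.1f} GB")
```

At 16-bit precision the weights come to roughly 8 GB, and 4-bit quantization would bring that down to about 2 GB, which is the range where a single workstation GPU or an on-premise server becomes realistic.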

MedASR: Specialized Speech Recognition

In the fast-paced environment of a hospital ward, typing on a keyboard is often a bottleneck to clinical efficiency. Voice remains the most natural and fastest interface for physicians, whether dictating a clinical note or conversing with a patient. However, general-purpose speech recognition systems frequently fail when confronted with the complexity of medical jargon. To address this gap, Google introduces a cornerstone of its medical AI ecosystem: a dedicated model built to transcribe clinical terminology accurately.

High-Precision Medical Transcription

Text may be king, but speech remains the natural communication mode for clinicians. Google is therefore introducing MedASR, an automatic speech recognition (ASR) model specifically fine-tuned for the medical domain. If you’ve ever tried using general-purpose ASR systems to transcribe a medical dictation filled with Latin terms and technical abbreviations, you know the results are often disastrous.

The numbers speak for themselves: compared to Whisper (OpenAI’s widely used general-purpose model), MedASR reduces the word error rate (WER) by 58% in relative terms on chest X-ray dictations, dropping from 12.5% to just 5.2%. On an internal benchmark covering diverse medical specialties and accents, error reduction reaches as high as 82%. For developers building voice-based clinical documentation tools, a domain-specific model like MedASR is a strong candidate for reaching clinically acceptable reliability.
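
For readers who want to reproduce this kind of comparison on their own dictations, here is a minimal sketch of the standard word error rate (word-level edit distance divided by reference length) and of the relative reduction quoted above. The function names and the sample sentence are illustrative, and the sketch does no text normalization (casing, punctuation), which real evaluations usually apply first.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in reference."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # Levenshtein distance over words, keeping only the previous row.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        cur = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            cur.append(min(prev[j] + 1,          # deletion
                           cur[j - 1] + 1,       # insertion
                           prev[j - 1] + cost))  # match or substitution
        prev = cur
    return prev[-1] / len(ref)


def relative_reduction(baseline: float, improved: float) -> float:
    """Fraction by which `improved` lowers the `baseline` error rate."""
    return (baseline - improved) / baseline


if __name__ == "__main__":
    wer = word_error_rate(
        "no acute cardiopulmonary abnormality",
        "no acute cardio pulmonary abnormality",
    )
    print(f"WER: {wer:.2f}")
    # Figures from the article: 12.5% WER down to 5.2% WER.
    print(f"Relative reduction: {relative_reduction(12.5, 5.2):.1%}")
```

Note that the 58% figure is a relative reduction: (12.5 − 5.2) / 12.5 ≈ 0.58. The absolute drop is 7.3 percentage points.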

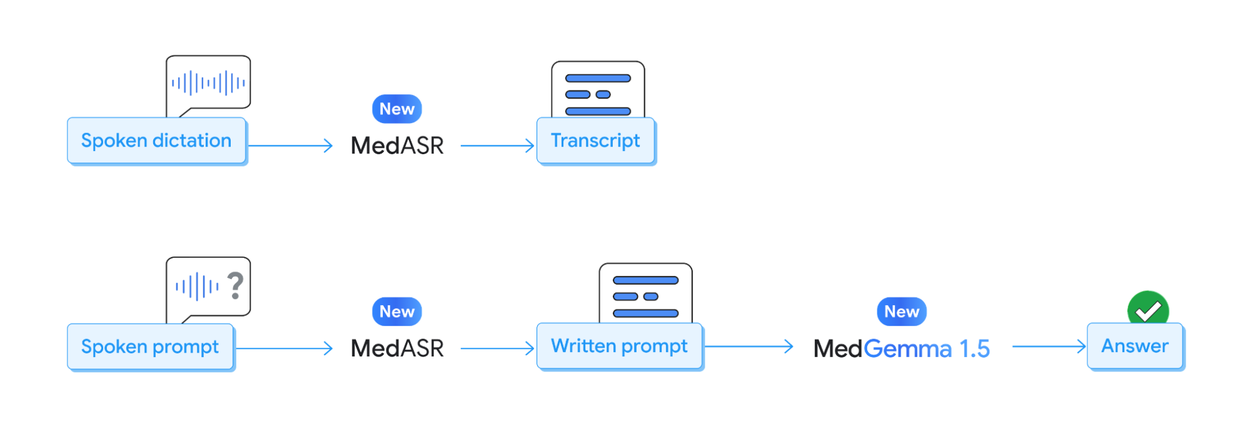

Multimodal Audio-Text Synergy

The true power of this ecosystem emerges when its components are combined. MedASR isn’t just a passive transcription tool; it becomes a natural input interface for MedGemma. Imagine a workflow where a radiologist speaks observations aloud or asks a complex question verbally.

MedASR converts that speech into highly accurate, structured text, which is then instantly fed into MedGemma for clinical reasoning or associated image analysis. Google even provides tutorial notebooks to help developers build these multimodal pipelines. This represents a giant leap toward conversational AI assistants that can dramatically streamline both administrative and clinical tasks.
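
The shape of such a pipeline can be sketched independently of any particular SDK. In the snippet below, the transcriber and the reasoning model are passed in as plain callables, and `fake_transcribe` and `fake_reason` are stand-ins invented for this example. None of these names come from Google's notebooks, and a real integration would replace the stand-ins with actual MedASR and MedGemma calls.

```python
from typing import Callable, Dict, Optional

# Hypothetical interfaces: audio bytes -> text, and (text, optional image) -> text.
Transcriber = Callable[[bytes], str]
Reasoner = Callable[[str, Optional[bytes]], str]


def dictation_pipeline(
    audio: bytes,
    transcribe: Transcriber,
    reason: Reasoner,
    image: Optional[bytes] = None,
) -> Dict[str, str]:
    """Run speech-to-text, then pass the transcript (and image) to the reasoner."""
    transcript = transcribe(audio).strip()
    if not transcript:
        # Fail early rather than sending an empty question downstream.
        raise ValueError("empty transcript; nothing to send to the reasoning model")
    answer = reason(transcript, image)
    # Keep the transcript so a clinician can check what the model was asked.
    return {"transcript": transcript, "answer": answer}


def fake_transcribe(audio: bytes) -> str:
    """Stand-in for a speech model; always returns the same question."""
    return "is there a pleural effusion"


def fake_reason(text: str, image: Optional[bytes]) -> str:
    """Stand-in for a multimodal model; echoes the question it received."""
    return f"[draft, needs clinician review] question received: {text}"


if __name__ == "__main__":
    print(dictation_pipeline(b"\x00\x01", fake_transcribe, fake_reason))
```

Returning the transcript alongside the answer is a deliberate choice: if the speech step mishears a term, the error is visible to the reviewer instead of being hidden inside the final response.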

Community Initiatives and Competition

To further accelerate innovation, Google isn’t just releasing code; it is also launching the MedGemma Impact Challenge, a Kaggle-hosted hackathon with $100,000 in prizes. This is a direct call to our developer community: take these tools, get creative, and build tomorrow’s solutions. Whether you’re a seasoned engineer or a curious student, this is the perfect opportunity to get hands-on and apply these new capabilities to real-world healthcare challenges.

Ecosystem, Integration, and Impact

No matter how powerful an AI model is, it’s useless if it remains isolated in a research lab. The true value of MedGemma and MedASR lies in their ability to integrate seamlessly into existing developer workflows and hospital systems. From cloud infrastructure to standard medical imaging formats, Google has designed these tools to be deployable, adaptable, and scalable.

Technical Deployment and DICOM Support

For these models to be truly useful, they must work with established healthcare standards. Google has made a significant effort to include full support for DICOM (Digital Imaging and Communications in Medicine), the universal standard for storing and transmitting medical images.

Applications deployed on Google Cloud now benefit from native DICOM compatibility, enabling seamless ingestion of data directly from CT scanners and MRI machines without cumbersome format conversions or loss of critical metadata. Moreover, developers can fine-tune these models on their own datasets using efficient techniques like LoRA (Low-Rank Adaptation), making it fast and computationally affordable to adapt the models to niche specialties such as dermatology or ophthalmology.
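
To see why LoRA is computationally affordable, it helps to count parameters. LoRA freezes a weight matrix of shape d_out × d_in and trains two small matrices of rank r instead, which adds r × (d_in + d_out) parameters. The sketch below does that arithmetic. The 2560 × 2560 layer size and rank 8 are illustrative assumptions, not MedGemma's actual dimensions or a recommended setting.

```python
def lora_added_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters LoRA adds to one d_out x d_in weight matrix.

    The update is B @ A with A of shape (rank, d_in) and B of shape (d_out, rank).
    """
    return rank * (d_in + d_out)


def trainable_fraction(d_out: int, d_in: int, rank: int) -> float:
    """Ratio of LoRA parameters to the frozen matrix's parameters."""
    return lora_added_params(d_out, d_in, rank) / (d_out * d_in)


if __name__ == "__main__":
    d_out, d_in, rank = 2560, 2560, 8  # illustrative sizes only
    added = lora_added_params(d_out, d_in, rank)
    print(f"Added parameters: {added}")
    print(f"Fraction of the full matrix: {trainable_fraction(d_out, d_in, rank):.4%}")
```

With these example sizes, the adapter trains well under 1% of the parameters in the layer it modifies, which is why fine-tuning for a niche specialty can fit on hardware that could never train the full model.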

Real-World Application Examples

Far from theoretical, these models are already in action. Google’s report highlights the work of Qmed Asia, a Malaysian startup that adapted MedGemma to create a conversational interface capable of navigating over 150 clinical practice guidelines. Physicians can now query these complex knowledge bases in natural language to receive instant, context-aware decision support.

Ethical Framework and Technical Limitations

Integrating AI into clinical workflows is a fascinating advancement, but it comes with immense responsibility. Unlike generating code or artistic images, an error in medical AI can have critical human consequences. It is therefore essential to approach these technologies with clear-eyed awareness, fully understanding their safeguards, the rigorous handling of sensitive data, and the central role that human oversight must retain in validating results.

Data Privacy and Security

As technologists, we must also be stewards of ethics. Google emphasizes that its models were trained on a carefully curated mix of public and private datasets that underwent strict de-identification procedures. Patient privacy remains the absolute priority. Anyone deploying applications based on these models must uphold these high standards, ensuring that all derived systems comply with established security protocols and data anonymization practices.

Need for Clinical Validation

Finally, it is crucial to reiterate the essential disclaimer accompanying these tools: MedGemma and MedASR are assistive technologies, not replacements for clinicians. They are provided as foundational building blocks for development and must not be used for direct clinical diagnosis without thorough, context-specific validation by qualified healthcare professionals.

Hallucinations and errors remain possible. Any system built on these models must incorporate a human-in-the-loop verification step. AI suggests; the clinician decides. It is by embracing this philosophy that we can develop tools that are not only powerful but also safe, trustworthy, and truly beneficial for the future of medicine.
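
In code, a human-in-the-loop step can be as simple as refusing to release any model output that a clinician has not signed off on. The following is a minimal sketch of that gate. The `Suggestion` class and `release` function are hypothetical names, and a production system would also need authentication, audit logging, and persistence.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Suggestion:
    """A model-generated draft that stays unapproved until a clinician reviews it."""
    text: str
    reviewer: Optional[str] = None
    approved: bool = False

    def review(self, reviewer: str, approve: bool,
               corrected_text: Optional[str] = None) -> None:
        """Record the clinician's decision, optionally replacing the draft text."""
        if corrected_text is not None:
            self.text = corrected_text
        self.reviewer = reviewer
        self.approved = approve


def release(suggestion: Suggestion) -> str:
    """Return the text only if a named clinician has approved it."""
    if not suggestion.approved or suggestion.reviewer is None:
        raise PermissionError("suggestion has not been approved by a clinician")
    return suggestion.text


if __name__ == "__main__":
    draft = Suggestion("Possible right lower lobe consolidation.")
    draft.review("dr_lee", approve=True,
                 corrected_text="Right lower lobe consolidation, likely pneumonia.")
    print(release(draft))
```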

Yang, L., et al. (2025). MedGemma Technical Report. arXiv. https://arxiv.org/abs/2507.05201