Reward model: what is it?

Since the rise of large language models, one technical term keeps coming up in discussions among experts: the reward model. Behind this somewhat mysterious name lies a key component that, in many cases, helps AI better understand what we consider to be a “right” answer.

Its mission is always the same: to assign a rating or a score to an output in order to guide learning. However, it is not mandatory. Some AI systems work very well without one, relying on explicit rules or measurable goals. Understanding what a reward model is, how it is used and when it is needed lifts the veil on one of the most influential, and sometimes most misunderstood, components of modern artificial intelligence.

What is a reward model?

A reward model is a model that evaluates an action or an output produced by another model and assigns it a score. This score represents how well the output matches a given goal: for example, a clear and correct answer from a chatbot, a successful movement by a robot, or an image that faithfully matches its description in an image generator. In practical terms, it acts as a “judge” that measures the quality of a result according to defined criteria, which are sometimes drawn directly from human preferences.
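Seen from the outside, the “judge” described above is simply a function that maps a prompt and an output to a number. The sketch below illustrates that interface only: `toy_reward` is a hypothetical hand-written scorer standing in for a learned model, and its word-overlap rule is an arbitrary assumption chosen for the example, not how real reward models decide.

```python
from typing import Callable

# From the outside, a reward model is just a function: (prompt, output) -> score.
RewardModel = Callable[[str, str], float]


def toy_reward(prompt: str, output: str) -> float:
    """Hypothetical hand-written judge standing in for a learned model:
    +1 per output word that also appears in the prompt, -0.1 per output word."""
    prompt_words = set(prompt.lower().split())
    output_words = output.lower().split()
    overlap = sum(1 for word in output_words if word in prompt_words)
    return overlap - 0.1 * len(output_words)


def rank_outputs(judge: RewardModel, prompt: str, outputs: list[str]) -> list[tuple[float, str]]:
    """Score every candidate with the judge and sort from best to worst."""
    scored = [(judge(prompt, output), output) for output in outputs]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


ranked = rank_outputs(
    toy_reward,
    "what is a reward model",
    ["bananas are a yellow fruit", "a reward model is a judge"],
)
for score, output in ranked:
    print(f"{score:.1f}  {output}")
```

Because anything with this signature can play the role of judge, the toy function could be swapped for a trained model without changing `rank_outputs`.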

In the context of large language models (LLMs) such as ChatGPT, the reward model is often used as part of RLHF (Reinforcement Learning from Human Feedback). Human evaluators are shown several possible answers to the same question, and their preferences are recorded. These data are used to train the reward model, which learns to predict which answer a human would consider better. This virtual judge can then steer the LLM toward behavior that is more useful, more accurate and safer.
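When evaluators rank several answers to one question, the ranking is commonly split into pairwise comparisons before training. The sketch below shows that conversion under two assumptions: the input list is ordered best first, and the record fields `prompt`, `chosen` and `rejected` are illustrative names, not a required format.

```python
from itertools import combinations


def ranking_to_pairs(prompt: str, ranked_answers: list[str]) -> list[dict]:
    """Turn one evaluator's ranking (best answer first) into pairwise comparisons.
    A ranking of K answers yields K * (K - 1) / 2 pairs."""
    pairs = []
    # combinations() yields index pairs (i, j) with i < j,
    # so answer i was ranked above answer j.
    for i, j in combinations(range(len(ranked_answers)), 2):
        pairs.append({
            "prompt": prompt,
            "chosen": ranked_answers[i],
            "rejected": ranked_answers[j],
        })
    return pairs


pairs = ranking_to_pairs("Explain RLHF", ["answer A", "answer B", "answer C"])
for p in pairs:
    print(p["chosen"], ">", p["rejected"])
```

Splitting a ranking this way extracts more training signal from a single labeling session: three ranked answers give three comparisons, four give six.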

How does a reward model work?

A reward model rests on a simple idea: learning to score the quality of a result automatically. To do this, we first build a dataset in which each example is associated with a score or a preference. In the case of an LLM, these data often come from comparisons made by human evaluators: they are shown two or more answers to the same question and indicate which one is best. The reward model then learns to predict the human choice from the text it is given.
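Learning to predict the human choice from pairwise comparisons is often framed with a Bradley-Terry style loss, −log σ(r(chosen) − r(rejected)), which pushes the preferred answer's score above the rejected one's. The sketch below fits that loss under heavy simplifying assumptions. Each answer is reduced to a hypothetical two-number feature vector (clarity, correctness), and the reward is a linear function of it. A real LLM reward model usually reads the raw text with a neural network instead.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def score(w: list[float], x: list[float]) -> float:
    """Linear reward r(x) = w . x over a hypothetical feature vector x."""
    return sum(wi * xi for wi, xi in zip(w, x))


def train_pairwise(pairs, dim, lr=0.5, epochs=200):
    """Fit w by stochastic gradient descent, one pair at a time, on the loss
    -log sigmoid(r(chosen) - r(rejected))."""
    w = [0.0] * dim
    for _ in range(epochs):
        for x_chosen, x_rejected in pairs:
            margin = score(w, x_chosen) - score(w, x_rejected)
            # The loss gradient w.r.t. w is -(1 - sigmoid(margin)) * (x_chosen - x_rejected),
            # so a descent step adds lr * (1 - sigmoid(margin)) * (x_chosen - x_rejected).
            coeff = 1.0 - sigmoid(margin)
            for k in range(dim):
                w[k] += lr * coeff * (x_chosen[k] - x_rejected[k])
    return w


# Hypothetical features per answer: [clarity, correctness].
# Each tuple is (preferred answer, rejected answer).
pairs = [
    ([1.0, 1.0], [0.0, 1.0]),
    ([0.5, 1.0], [0.5, 0.0]),
    ([1.0, 0.0], [0.0, 0.0]),
]
w = train_pairwise(pairs, dim=2)
for x_chosen, x_rejected in pairs:
    # Predicted probability that a human prefers the chosen answer.
    print(round(sigmoid(score(w, x_chosen) - score(w, x_rejected)), 3))
```

After training, σ of the score difference can be read as the model's estimated probability that a human would prefer one answer over the other, which is the “prediction of the human choice” described above.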

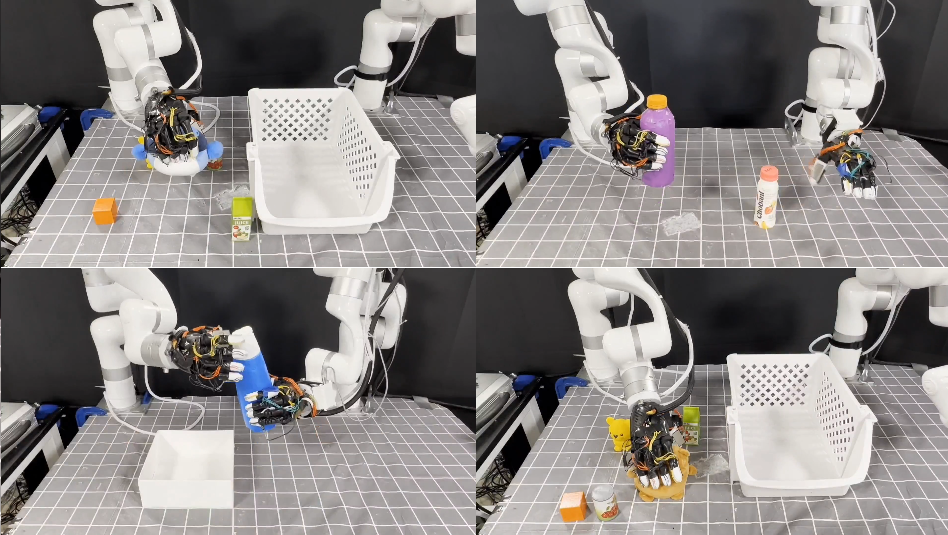

Once trained, the reward model can guide the training of the main model through a process such as RLHF. The LLM generates responses, the reward model scores them, and a reinforcement learning algorithm adjusts the LLM to maximize the average “reward” obtained. Outside of LLMs, the principle is similar: in robotics, the reward model scores the success of an action (for example, grasping an object), whereas in a video game it determines whether a move brings the player closer to victory. In all cases, it acts as a feedback system that turns a vague objective into a clear signal to guide the AI.
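The “adjust the model to maximize average reward” step can be illustrated on a toy scale. The sketch below assumes a policy that only chooses among three fixed answers through a softmax over logits, and a reward model that has already given those answers hypothetical scores. It applies the exact gradient of the expected reward. Practical RLHF instead uses sampled estimates (for example PPO) on a full LLM, usually with a penalty that keeps the model close to its original version, so treat this as an illustration of the principle only.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Convert logits to probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


def policy_gradient_step(logits: list[float], rewards: list[float], lr: float) -> list[float]:
    """One ascent step on J = sum_i p_i * r_i for a softmax policy,
    using the exact gradient dJ/dlogit_j = p_j * (r_j - J)."""
    probs = softmax(logits)
    expected = sum(p * r for p, r in zip(probs, rewards))
    return [l + lr * p * (r - expected) for l, p, r in zip(logits, probs, rewards)]


# Hypothetical scores a trained reward model gave to three candidate answers.
rewards = [1.0, 0.2, -0.5]
logits = [0.0, 0.0, 0.0]  # the toy "LLM" starts with no preference

for _ in range(200):
    logits = policy_gradient_step(logits, rewards, lr=1.0)

probs = softmax(logits)
print([round(p, 3) for p in probs])
```

Probability mass shifts toward the answer the reward model scored highest, so the average reward rises. This is the same direction of change RLHF aims for, without its sampling or safeguards.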

When should you use a reward model?

Reward models are valuable when subjective or ill-defined criteria, such as the clarity of a text, the warmth of a tone or the aesthetics of an image, must be turned into a measurable training signal. They are also useful in complex environments where spelling out every rule would be impossible, or when expectations evolve quickly and the system must be retrained without starting from scratch. Conversely, when an automatic metric already provides a reliable measure, or when compute and latency constraints are strict, adding such a “judge” may be superfluous.
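For contrast, when the goal can be checked automatically, a fixed rule can supply the reward directly with no learned judge at all. The sketch below assumes a hypothetical arithmetic task whose reference answer is a single number, and treats the last number in the model's output as its final answer. Both choices are illustrative assumptions.

```python
import re


def exact_match_reward(output: str, reference: str) -> float:
    """Rule-based reward: 1.0 if the last number in the output equals the reference, else 0.0."""
    numbers = re.findall(r"-?\d+(?:\.\d+)?", output)
    if not numbers:
        return 0.0
    return 1.0 if float(numbers[-1]) == float(reference) else 0.0


print(exact_match_reward("6 x 7 = 42", "42"))        # 1.0
print(exact_match_reward("I think it is 41", "42"))  # 0.0
```

A rule like this is cheap, deterministic and adds no inference latency, but it only works because “correct” is unambiguous here. Criteria such as tone or clarity have no such rule, which is where a learned reward model earns its cost.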