Artificial Intelligence

DINOv2 at NASA: Global Vision

Small rovers able to “see” and understand their environment as well as large ones: this is the idea behind DINOv2 at NASA. The Jet Propulsion Laboratory (JPL) relies on this vision model to give robots more reliable perception, a prerequisite for moving, identifying areas of interest, and prioritizing observations far from Earth, where communication links are scarce and the terrain is unknown. The initiative illustrates the meeting of vision foundation models and planetary exploration, with the goal of increasing scientific autonomy in the field.

DINOv2, a general-purpose vision model for space

Created by Meta AI, DINOv2 learns without human annotations and produces general-purpose visual representations that can be used for many tasks, from scene recognition to segmentation and depth estimation. In other words, the model provides a common “visual language” that remains relevant when the setting changes, a major advantage for destinations such as the Moon or Mars, where training data are, by definition, limited. The authors show that large-scale self-supervision on varied images is enough to obtain robust, high-performing features that need no heavy fine-tuning and transfer from one context to another. For planetary exploration, DINOv2 ticks two boxes: generalization and frugality in annotated data.

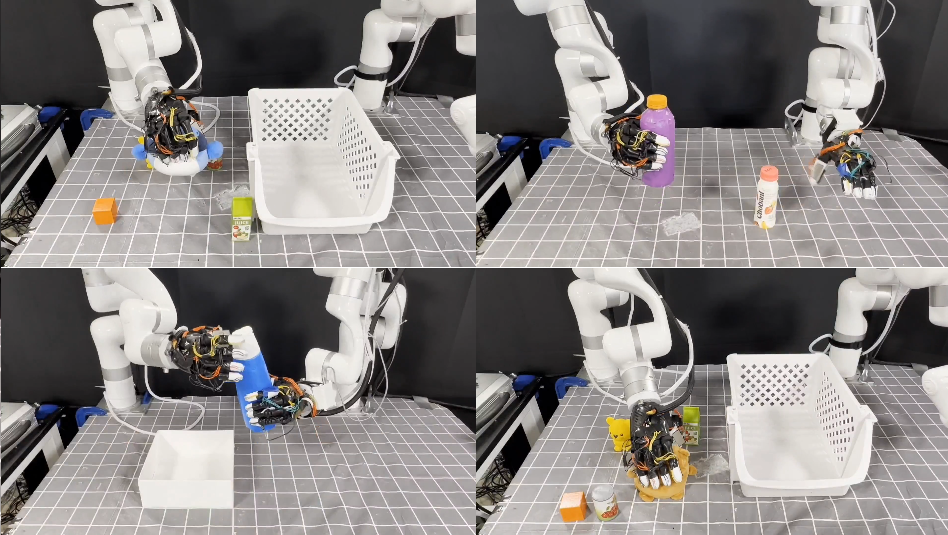

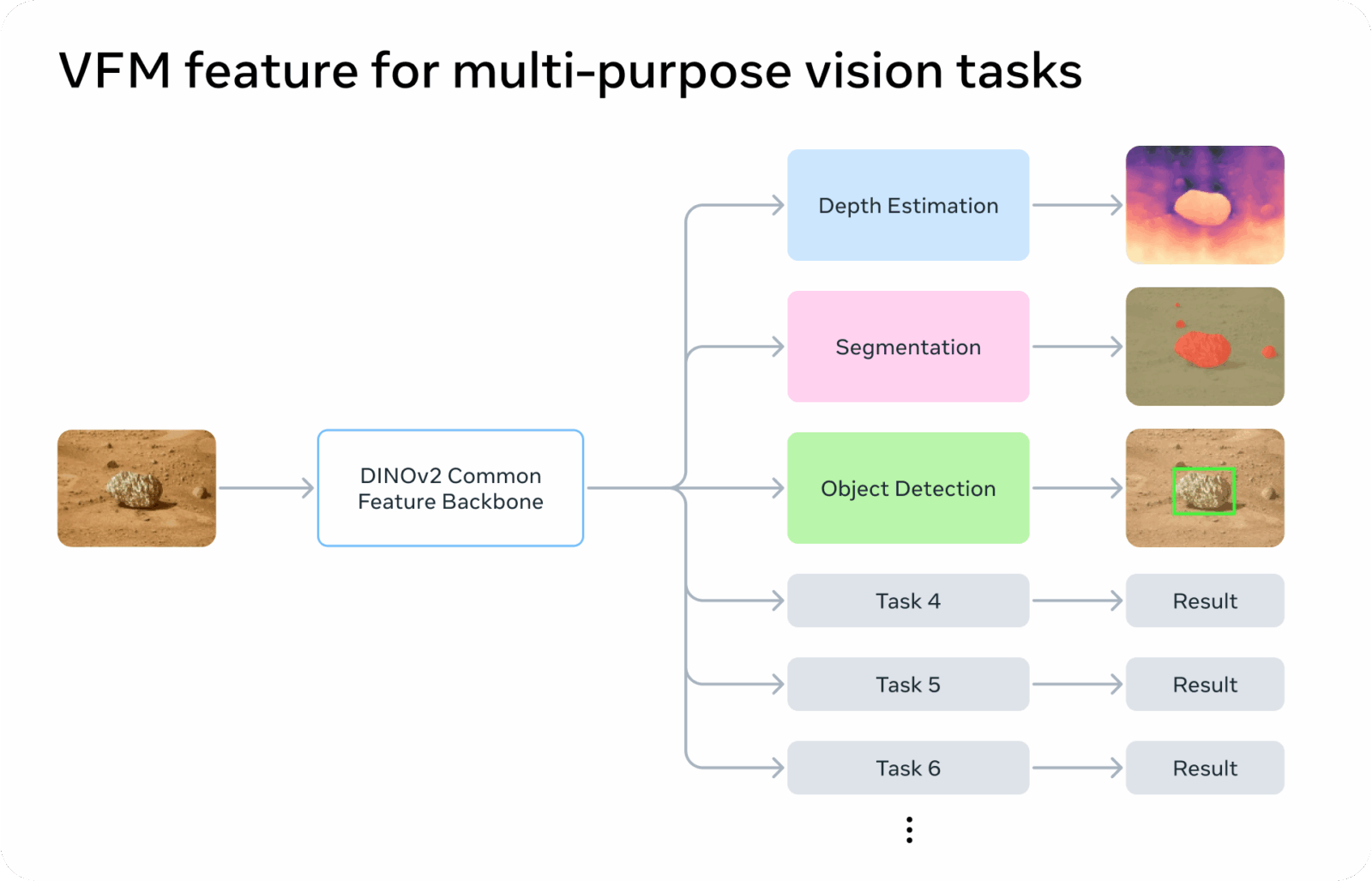

JPL builds a unified perception engine

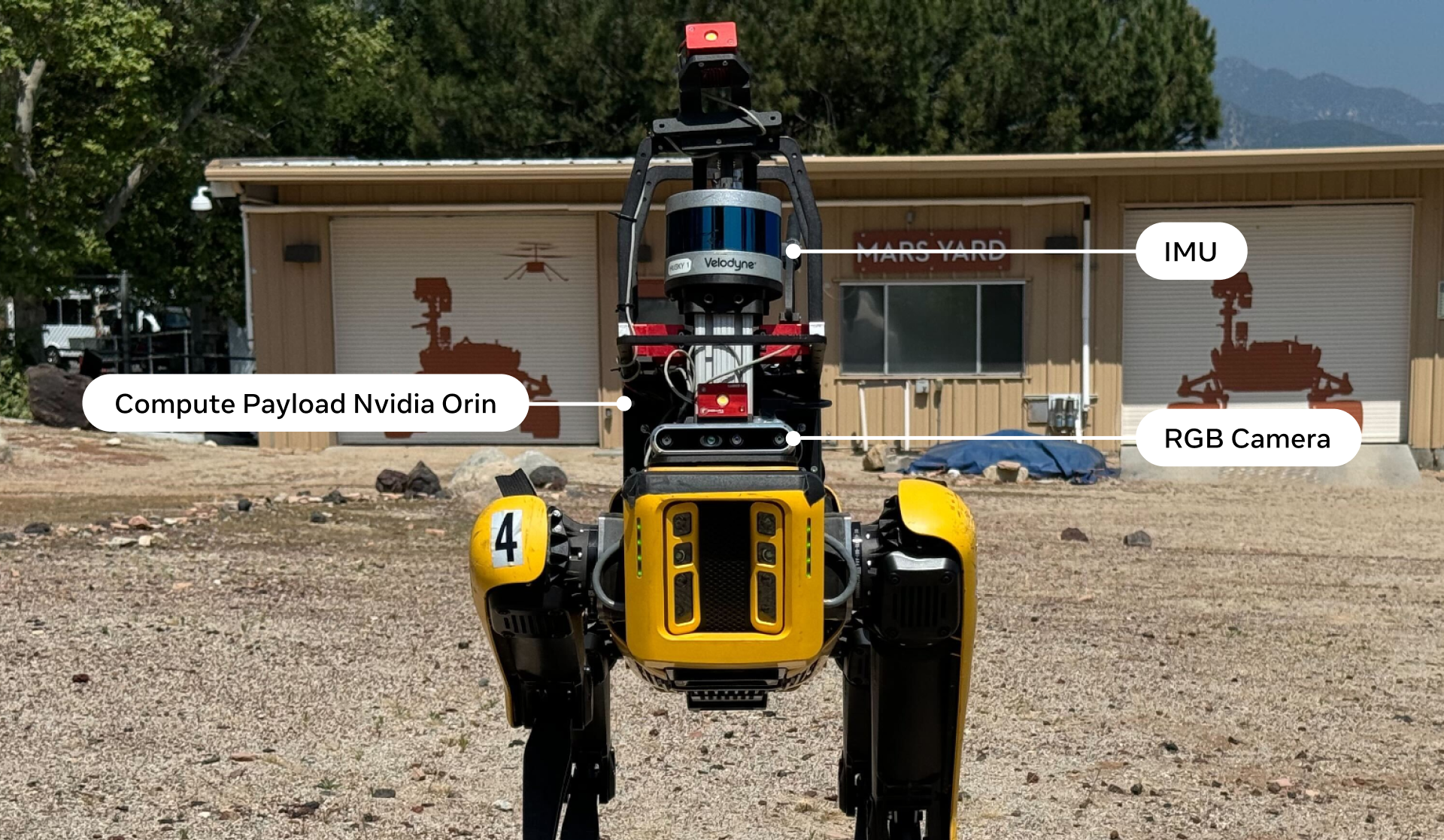

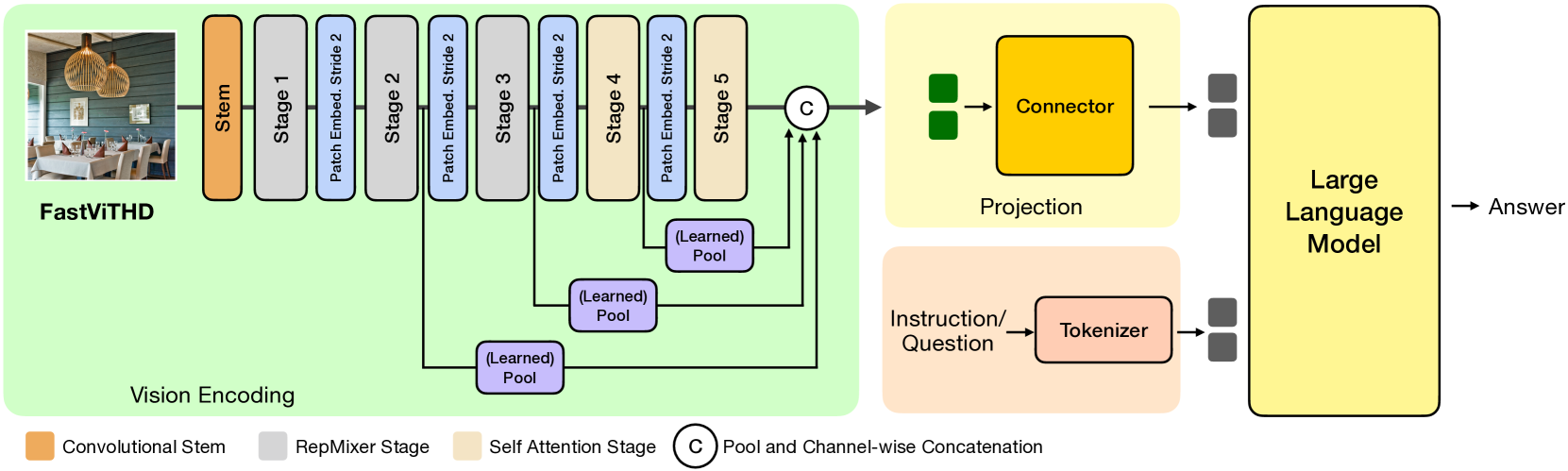

To run DINOv2 on real robots, JPL designed the Visual Perception Engine, a software framework that connects a foundation model (DINOv2) to several specialized “heads.” From a single camera image, the system extracts visual features with DINOv2, then triggers modules that each produce an output useful to the mission: monocular depth to understand the geometry of the scene, semantic segmentation to distinguish soil, rocks, or sky, and object detection to identify targets. This factorized approach avoids duplicating the analysis effort and simplifies maintenance from one mission to the next.
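The shared-backbone idea can be illustrated with a minimal Python sketch. This is not the Visual Perception Engine's actual API: the `PerceptionEngine` class, the `register_head` and `process` methods, and the `toy_backbone` function are hypothetical names invented for illustration, and the backbone is a trivial stand-in for DINOv2 so that the example runs without any dependency. It only shows the pattern of computing features once per image and passing them to several heads.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical types for illustration: images and features are plain
# Python lists so the sketch has no external dependency.
Image = List[List[float]]
Features = List[float]


def toy_backbone(image: Image) -> Features:
    """Stand-in for DINOv2 (assumption): one mean value per image row."""
    return [sum(row) / len(row) for row in image]


@dataclass
class PerceptionEngine:
    """Hypothetical engine: one shared backbone, several task heads."""
    backbone: Callable[[Image], Features]
    heads: Dict[str, Callable[[Features], object]] = field(default_factory=dict)
    backbone_calls: int = 0  # counts how often features were computed

    def register_head(self, name: str, head: Callable[[Features], object]) -> None:
        """Add a head, or replace the one already stored under `name`."""
        self.heads[name] = head

    def process(self, image: Image) -> Dict[str, object]:
        """Run the backbone once, then feed the same features to every head."""
        features = self.backbone(image)
        self.backbone_calls += 1
        return {name: head(features) for name, head in self.heads.items()}


engine = PerceptionEngine(backbone=toy_backbone)
# Toy heads (assumptions): real heads would be trained networks.
engine.register_head("depth", lambda f: [1.0 - v for v in f])
engine.register_head("segmentation", lambda f: ["sky" if v > 0.5 else "soil" for v in f])

image = [[1.0, 0.5], [0.0, 0.25]]
outputs = engine.process(image)
print(outputs)
print("backbone calls:", engine.backbone_calls)
```

In this sketch, both heads consume the same features and the backbone runs a single time per image, which is the source of the savings the factorized design aims for. Calling `register_head` with an existing name swaps a head without touching the backbone or the other heads.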

JPL distributes this engine under an open source license and provides a ROS 2 integration for the robotics ecosystem. For teams developing rovers, drones, or experimental platforms, this means DINOv2 can be reused as a common building block, with heads added (or replaced) according to the scientific need, without rewriting the entire stack. The open code and documentation also make auditing and community adoption easier.

A real breakthrough for planetary exploration

On another planet, communication latency makes it necessary to embed more intelligence at the edge. With DINOv2 at NASA, perception becomes more robust to variations in lighting, texture, and environment, which improves autonomous navigation and reduces the risk of getting stuck. Above all, unifying perception around a common visual core helps align scientific prioritization in the field: a robot can quickly decide where to go, what to look at, and when to send a summary to the teams on Earth.

This evolution is part of a broader trend in AI: the use of vision foundation models as the basis for embedded systems. The stakes go beyond Mars. The same principles apply to terrestrial robotics, infrastructure inspection, agriculture, and rescue, where connectivity is uncertain and scenes change constantly. DINOv2, already proven in research, is becoming a credible industrial building block for demanding field applications, with a clear path to an operational proof of concept.